What do you know? I mean really know. You know that you exist. You know that you are reading this article. You know what physical pain is, as well as pleasure. You know what cold and heat feel like. You know that when you don’t sleep well, you feel tired the following day. You know that if you don’t eat for a day, you feel hungry. You know what anger feels like, or desire, jealousy, relief, love, satisfaction or joy. You know what an orange tastes like, and you know it tastes different from an apple. You know that illness and death are realities.

But there are also things that you may think you know, but actually do not. Perhaps the information came to you from other people – through conversation, the news, or the internet – and you assume that the people who provided it actually know. Or perhaps you grew up with certain beliefs, and you have simply retained those beliefs. Or perhaps you think about certain things a lot, and have come to certain conclusions about them, although you have no direct experience of those things. In each of these cases, you don’t really know; you just have an opinion. Not that there is anything wrong with opinions, as long as you remember that they are opinions, not knowledge.

The Buddha was very astute on this issue. He often pointed out that what someone thought they knew was really an opinion. Of course, his main interest was the path to Enlightenment. Consequently, all of his sayings that have come down to us relate to that path: in other words, to the spiritual life. However, much of what he says about the spiritual life is applicable to other areas of life too.

As a spiritual teacher, the Buddha exemplified this careful distinction between knowledge and opinion. Occasionally he would hear that one of his disciples was saying or doing something ‘unskilful’, something that might bring harm to the disciple and others. In such cases the Buddha always asked the disciple to come and see him, and to say if what was reported was true. Only once he had heard it directly from the source would he pass judgement and offer correction – a practice very different from the ‘court of public opinion’ that is common these days, where an accusation is taken to be true simply because someone has made it.

What Can We Rely On?

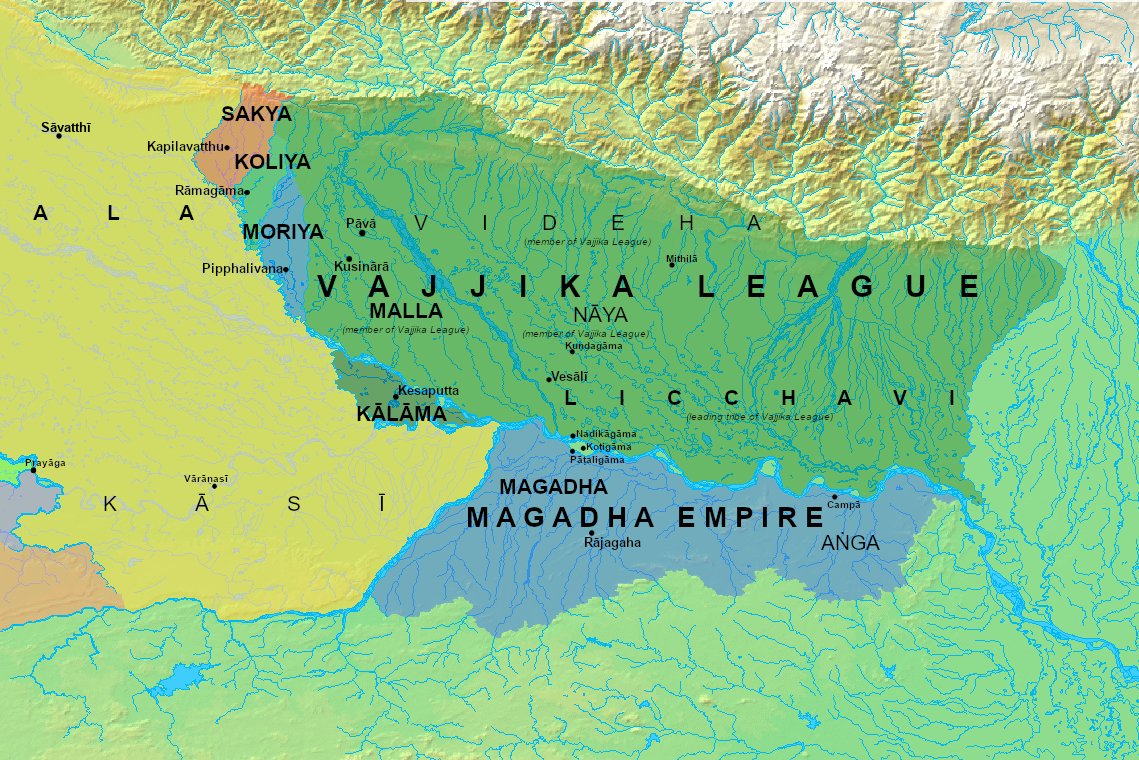

There is a well-known sutta (text) in which the Buddha makes clear the difference between knowledge and opinion. He is travelling, and comes to a town called Kesaputta, in which the Kālāma tribe live. Other spiritual teachers have passed through Kesaputta before the Buddha, and the Kālāmas tell him that those teachers

… explain and elucidate their own doctrines, but disparage, denigrate, deride, and denounce the doctrines of others. But then some other ascetics and brahmins come to Kesaputta, and they too explain and elucidate their own doctrines, but disparage, denigrate, deride, and denounce the doctrines of others. We are perplexed and in doubt … as to which of these good ascetics speak truth and which speak falsehood.

The Buddha first of all says that it is understandable and appropriate for them to feel doubt and uncertainty, and then he gives a list of ten things that they should not ‘go by’ or rely on:

… do not go by oral tradition, by lineage of teaching, by hearsay, by a collection of scriptures, by logical reasoning, by inferential reasoning, by reasoned cogitation, by the acceptance of a view after pondering it, by the seeming competence of a speaker, or because you think: ‘The ascetic is our guru.’1

There is a fair bit of overlap between these ten terms, and they have been translated in different ways, but I won’t attempt a detailed investigation of them because the overall meaning is clear. A friend of mine, Nagapriya, has written a more scholarly account,2 and in that article he points out that this list can usefully be divided into two categories: authority and reason. In the ‘reason’ group, we can put the middle four items (from ‘logical reasoning’ to ‘the acceptance of a view after pondering it’), and in the ‘authority’ group everything else.

It is not clear how the items grouped as ‘reason’ differ from one another, but again I don’t need to tease out the differences. The Buddha’s main point is clear: the Kālāmas can’t rely on whatever they hear from others, even if those others seem to know what they are talking about. What is more, the Kālāmas can’t even rely on whatever they have reasoned for themselves. What then can they rely on? The Buddha explains:

But when, Kālāmas, you know for yourselves: ‘These things are unwholesome; these things are blameworthy; these things are censured by the wise; these things, if accepted and undertaken, lead to harm and suffering,’ then you should abandon them.

‘When you know for yourselves…’, but what does that mean? How do you know for yourself? The Buddha gives the example of ‘unwholesome’ and ‘blameworthy’ actions, which lead to harm and suffering. Later he goes into this in more detail. He asks the Kālāmas a question: if you act from greed, what will be the result? They reply, ‘Suffering.’ He continues: if you act from hatred and ignorance, what will be the result? ‘Suffering’ again. How do they know this? Firstly, from their own experience, but also because actions proceeding from greed, hatred and delusion are censured by the wise. He seems to be saying that the Kālāmas can rely not just on their own experience but also on what ‘the wise’ say.

You might ask how that is different from two of the things the Buddha said they could not rely on: ‘the seeming competence of a speaker’ and ‘the thought: “The ascetic is our guru”’. I think the answer lies in the difference between ‘extrinsic authority’ and ‘intrinsic authority’. Extrinsic authority is conferred upon someone from an external source. In the Christian tradition, for instance, someone who holds an ecclesiastical position, such as that of a bishop, has extrinsic authority. A bishop can ordinarily count on the respect of members of his church because of his office. But while a bishop may be a good and wise person, it is not too controversial to assert that this is not always the case. It would be unwise for a Christian to rely on whatever bishops say just because they are bishops. Intrinsic authority, on the other hand, is the natural authority certain individuals have simply by virtue of who they are, an authority you see for yourself as you get to know them. Church members who happen to know a bishop well might thereby come to the conclusion that he is indeed good and wise. They would thus consider him to be a bishop because he is good and wise, and not good and wise because he is a bishop.

So, according to the Buddha, we can only go by our experience and by what we learn from others whom we know (by direct acquaintance) to be wise. That may sound straightforward, but on further investigation it turns out to be a little more complicated. The problem with going by our own experience is that we don’t just have experience: we interpret our experience, and our interpretation is conditioned by our opinions. For instance, a friend of mine who believes the world is warming dangerously told me in an email that she knows as much from her own experience. She is about the same age as me, maybe a little younger, so she was probably born in the late 1950s. According to NASA’s Goddard Institute, the Earth’s average global temperature has risen by 0.97 degrees centigrade since 1960.3 Is it really possible for someone to notice such a small increase over sixty years? It seems more likely that my friend has interpreted her experience in line with her belief. Perhaps this is the reason the Buddha recommends listening to the wise: if you do misinterpret your experience, they may be able to point that out.

The Blind Men and the Elephant

Another problem with relying on your own experience is that experience is by nature personal, partial and particular, and it may not be sufficient to give you general and universal truths about the world. In another sutta, some of the Buddha’s disciples describe to him the numerous bitter philosophical disputes that are going on among various types of full-time religious practitioners – ascetics, brahmins, and so on:

The world is eternal – this alone is the truth, all else is foolish… The world is not eternal – this alone is the truth, all else is foolish… The world is finite/infinite… That which is soul, that is also the body… The soul is one thing, the body is another thing… The individual exists/does not exist after death… The individual exists and does not exist after death… The individual neither exists nor does not exist after death – this alone is the truth, all else is foolish.

They lived contending, quarrelling, disputing, attacking each other with sharp tongues, saying: ‘Such is true, such is not true; such is not true, such is true.’4

The disciples’ account of these controversies prompts the Buddha to comment. He offers the following parable. A king asks his ministers to find all those blind from birth and bring them to him. Once gathered together, they are placed around an elephant and told to touch whichever part of the elephant they are nearest. Some touch only its head, others only the ear, or the tusk, or the trunk, and so on. They are then asked to tell the king what the elephant is. Those who have touched the head say it is like a pot, those who have touched the ear say it is like a winnowing fan, and so on. By the end of the exercise, the elephant has been variously described as like a plough-share, a plough-pole, a store-house, a pillar, a mortar, a pestle, or a broom. The blind men then begin arguing amongst themselves, saying, ‘Such is an elephant, such is not an elephant; such is not an elephant, such is an elephant.’

The point is that the blind men were all going by their experience, but they were all misinterpreting it. Not only that. Each one of them had touched just one part of the elephant, and had generalised from that single part. If they had been allowed to walk around and touch the whole elephant, they would each have said that it was like a pot, but also like a winnowing fan, a plough-share, a plough-pole, and so on. By the end of the exercise, they would probably have been somewhat puzzled: ‘If it’s like all of those things, then what can it be?’ Or they might have listened to what the others said and taken that seriously, rather than contradicting and arguing with one another. Then they could have collectively posed the question: ‘What can it be?’ Their different experiences might have contributed to a greater understanding, or at least to a humble agreement that certainty was not available, rather than a futile and bitter dispute.

The blind men obviously represent the ascetics and brahmins who bitterly dispute philosophical questions. Perhaps the most significant part of this discourse is the sentence: ‘This alone is the truth, all else is foolish,’ which each of them repeats at the end of every metaphysical assertion that he makes. Of course, none of those assertions can be proven, but the ascetics and brahmins are convinced of their truth. We will return to this point later.

The ‘elephant’ in the story symbolises the metaphysical problems that the ascetics and brahmins disputed over, but it could equally be a symbol of any social or political problem today. Any such problem is likely to be complex, so we should be careful not to take hold of just one part of it, and imagine that by doing so we can ‘solve’ the problem. Yet this is what ideologies often cause us to do. They make us attribute the problems and faults of society to one cause, and assert that once that cause has been eliminated, an ideal society, or something much closer to one, will emerge.

As experience is not completely trustworthy, the Buddha offers the Kālāmas another condition: listen to what the wise say. But there are also problems with this. Firstly, you may not know anyone who is wise! Or if you do, they may only be fairly wise, or they may not be wise all of the time. Even wise people make mistakes. Or you may know a few people who seem very wise, but disagree with one another about important things.

There is yet another complication with the Buddha’s teaching to the Kālāmas. It would be easy to assume that the Buddha was telling them that they could not rely on that list of ten things at all. But this is contradicted by what he says in another sutta (which we will look at presently). This means that the Buddha didn’t give the Kālāmas two absolute opposites: ten things they can’t rely on at all, and two things they can rely on absolutely. He didn’t offer certainty, but probability. In effect, he said that if you look at your own experience and that of anyone you know who is to some extent wise, you will be in a better position to make wise choices.

Things that Could Turn Out to be True or False

Let’s take a look at that other sutta now.5 In this one the Buddha is talking with some brahmins, including a precocious young student called Kāpaṭhika. When an opportunity arises to address the Buddha, Kāpaṭhika says that, according to the ancient brahmanic hymns that have come down to them through oral transmission and the scriptural collections, the brahmins have come to the definite conclusion that ‘Only this is true, anything else is wrong.’ Kāpaṭhika asks the Buddha what he thinks about this.

Instead of answering, the Buddha asks a counter-question: does Kāpaṭhika know a single brahmin who claims to know and see this truth? Kāpaṭhika has to admit that he does not. The Buddha then asks whether Kāpaṭhika knows of a single brahmin teacher, going back as far as seven generations, who ever made such a claim. Again, Kāpaṭhika answers in the negative. The Buddha goes further. He asks about the composers of the ancient hymns: did they say that they knew and saw this truth? Kāpaṭhika replies in the negative once again. The Buddha says that in that case, the brahmins’ faith in their teachings turns out to be groundless. It is rather like a file of blind men who are holding on to each other. The first doesn’t see, nor does the second, nor the last. Consequently, any trust each blind man might repose in those preceding him in the file can only be misplaced.

Here again the Buddha uses the metaphor of blindness. This is significant. In his teaching he generally used the sense of sight as a metaphor for direct, experiential knowledge (as we do, for instance, with the word ‘insight’). Notice that he asks Kāpaṭhika if he knows anyone who ‘knows and sees’ the truth that the brahmins proclaim. To ‘know’ something is to understand it conceptually – to fit it into an existing conceptual structure. On the other hand, to ‘see’ it is to have a direct, experiential apprehension of it – one that simultaneously demands and produces a creative flexibility in the whole conceptual structure. The Buddha often used a distinctive phrase to denote wisdom or insight: ‘knowing and seeing things as they really are’.

Kāpaṭhika then protests that the brahmins don’t ‘honour this’ (i.e. adhere to their notions of truth) only out of faith, but also ‘as an oral tradition’. For us, this might not seem to add anything important, but the distinction seems to be between faith as an individual feeling of trust and the collective confidence that a traditional culture reposes in beliefs handed down over generations. The Buddha replies:

There are five things that can be seen to turn out in two different ways. What five? Belief, preference, oral tradition, reasoned consideration, and a reflective acceptance of a view.

Each of these can ‘turn out in two different ways’, that is, you may have faith in something, which may turn out to be true, or it may turn out to be ‘empty, hollow, and false’. Conversely, you may have no faith at all in something, yet it may turn out to be true nevertheless. And the same applies to the other four things. I’m going to look more closely at these five things in order to clarify what the Buddha meant.

Belief

Belief is a translation of saddhā, which comes from a root meaning ‘to place the heart upon’. It is more usually translated as faith, and can also be rendered as trust, confidence, or conviction. In the present context, however, ‘belief’ is probably the best word, defined as ‘an acceptance that something exists or is true, especially one without proof.’ It is important to note that the Buddha doesn’t dismiss Kāpaṭhika’s belief out of hand. Rather, he points out that it may be true, or it may be false. In fact, the Buddha spoke very approvingly about faith at other times. Later in this sutta, for instance, he talks about the desirability of finding a teacher and then scrutinising him for any signs of greed, hatred, or delusion. If you find none, you can ‘place faith in him’. In this instance, of course, the faith that you might place in such a teacher has some rational grounds because, as far as you’ve been able to ascertain, he has no greed, hatred, or delusion, the absence of which is considered to be characteristic of a wise person. In such a case your faith would not be ‘blind’, but partially sighted.

What the Buddha is warning against here is not belief, but mistaking belief for fact. The Merriam-Webster dictionary defines fundamentalism as ‘a movement or attitude stressing strict and literal adherence to a set of basic principles’. Other dictionaries have similar definitions, but I think an important characteristic of fundamentalism is that it takes its objects of faith as facts. This of course makes it very difficult to have a discussion with fundamentalists, because if you challenge them with the question ‘How do you know this?’ the response is likely to be ‘Because I know,’ or ‘Because it’s in the Holy Book.’ For the fundamentalist, belief is truth.

Reliance on belief is not confined to religious people. It’s a similar case with ideologues – those who believe a certain socio-political doctrine to be true, but with very little evidence for its veracity, or even despite plenty of evidence for its falsehood. Many intelligent people believed in communism in the first half of the twentieth century, and they continued to believe in it even when news of the brutal reality of communist regimes started coming in. (This is an example of belief perseverance, when beliefs persist after the evidence for them is shown to be false.)

Adherents of contemporary ideologies often have similar characteristics to religious fundamentalists: an insistence that what they believe is the truth, intolerance of anyone who voices disagreement, an unshakable conviction that they are on the side of the good (are even ‘saving the world’) and those who oppose them are on the side of evil. The net effect of all these things is often hatred and demonisation of anyone who dares to dissent from their chosen creed. Such dogmatic intolerance can of course be found at both ends of the political spectrum, but at the moment, at least in the USA and the UK, it is most conspicuous in the form of ‘progressive’ politics. One can see it in many climate change activists, ‘social justice’ campaigners, and contemporary ‘anti-racists’.

Another non-religious belief is that of materialism or physicalism – the belief that nothing exists but matter. Materialists like to think that their view is based on reason and hard scientific facts. They criticise those who believe in something beyond the material world, but they fail to see that their own view is in fact no more than a belief. The idea that nothing exists outside of what we can perceive (or logically infer from our perceptions) is not a provable fact – how could it be proven one way or the other? To believe in an afterlife, for instance, is no more irrational than the belief that when you die you are completely snuffed out.

Preference

‘Preference’ is ruchi, which can also be translated as ‘inclination’, ‘liking’, or ‘pleasure’. It may please us to think an idea is true. We like certain ideas because they confirm our already existing opinions. (This is known in western psychology as confirmation bias, myside bias or congeniality bias.) For instance, if you are politically right-wing, you will enjoy reading any news that supports right-wing opinions, and of course, you will dislike reading news that challenges them. Because of this you will tend to read or watch right-wing media outlets. The same is true if you happen to be left-wing, of course. Nowadays, our preferences are amplified by the internet. ‘Filter bubbles’ and ‘algorithmic editing’ display to us only information we are likely to agree with, while excluding opposing views.

We also have a tendency to slip from simply liking news that supports our opinions to exclusively believing such news and disbelieving the rest. As Craig Mazin, the writer and creator of HBO’s drama series Chernobyl said:

We live in a time where people seem to be re-embracing the corrosive notion that what we want to be true is more important than what is true.

This is all interesting from the Buddhist point of view, because in Buddhism ignorance (avidyā) is understood to be volitional. It is not simply and innocently not knowing something, but actively not wanting to know, and not allowing yourself to know. Ignorance is ignore-ance.

What Others Say

‘Oral tradition’ is a translation of anussava, which also means ‘report’, ‘repeated hearing’, and ‘tradition’ in general. The last of these is defined as ‘the transmission of customs or beliefs from generation to generation.’ This is what Kāpaṭhika relies on, and what the Buddha criticises (but does not altogether condemn). Kāpaṭhika’s tradition might hold some important truths, or it might not; probably it holds some truths and some falsehoods. The Buddha criticises Kāpaṭhika not for having faith in his tradition, but for thinking that it alone is true, and all else is wrong.

But let us explore the other meanings of anussava: ‘report’ or ‘repeated hearing’. In our modern world, these are more relevant than what Kāpaṭhika means by ‘oral tradition’. Suppose that an idea becomes very popular, and is repeated frequently in many contexts – in conversations, on social media, in newspapers and TV news. Because it is repeated so often, by so many people, we tend to assume it is true. The weakness of this is that most of those people have – just like us – simply assumed that others have come to independent and reliable judgements. This is called an information cascade. As Cass R. Sunstein points out in his book Conformity:

Sometimes scientists, lawyers, and other academics sign petitions or statements, suggesting that hundreds and even thousands of people share a belief or an opinion. The sheer number of signatures can be extremely impressive. But it is perhaps less so if we consider the likelihood that most signatories lack reliable information on the issue in question and are simply following the apparently reliable but uninformative judgement of numerous others.6

Sunstein describes how an information cascade originates, revealing how some scientists, lawyers and academics can be persuaded to sign a letter without having independent and reliable information on the matter. Simplifying his example, let us suppose that doctors are in discussion about whether or not to prescribe a certain drug, which though having some benefits also carries some risks. Individual doctors have some knowledge of the drug from their own experience, but they also care about the judgements of others. (This concern about other doctors’ judgements is not necessarily mere groupthink; it is akin to the Buddha’s advice to the Kālāmas to ‘go by’ what the wise say.) Doctor A thinks the benefits outweigh the risks and decides to prescribe the drug. Doctor B is not sure, but because Doctor A has decided to prescribe, follows A’s judgement. Doctor C’s own information suggests that the risk is actually high, perhaps too high to prescribe safely. But not being a very confident person, Doctor C prescribes because Doctors A and B apparently consider it safe to do so. There is now a cascade. Subsequent doctors, to the extent that they know what other doctors are doing, will probably do just what C did, and from there the information cascade will spread and grow in power.

Sunstein does not consider information cascades to be necessarily bad. After all, we don’t always have the time, energy, or resources to investigate every question for ourselves, so it is reasonable to follow what others say if they seem well qualified to say it. The problem is that some cascades begin with a mistake. For example, perhaps Doctor C’s own information – that the risks of taking the drug outweigh the benefits – was correct, but he was afraid to go against Doctors A and B, either because he lacked the confidence or because he feared his reputation might suffer if he went against their judgement. Then you have a potential cascade of misinformation (but only a potential cascade because Doctor D might dissent, and her reputation may be such that her voice carries more weight than those of Doctors A, B and C).

An information cascade is often given credibility by ‘experts’, whom we could regard as ‘the wise’ in their particular field. However, experts often disagree with one another, leaving us uncertain, and that uncertainty would be, as the Buddha said to the Kālāmas, appropriate. Another problem is that those experts who disagree with the information in the cascade usually do not receive as much attention as those who agree (some of whom may have started the cascade). I am writing this article in the UK during the second lockdown in response to Covid-19. The government says it is following ‘the science’, but there is considerable disagreement amongst the scientists as to the best way to deal with the crisis. In actuality, the government is following the advice of some scientists, and not following the advice of others. Obviously, the government has to make a decision about which scientists to trust, and perhaps it has made the right decision. But when it claims to be following ‘the science’, it gives the impression that there is only one scientific view.

Another problem is that experts in particular fields sometimes express opinions in fields outside their expertise. Of course, they are entitled to those opinions, but some experts are intoxicated by their expertise, and act as if they were experts in other areas too. Because their opinions are respected in their own field, they may be taken too seriously in other fields. An evolutionary biologist, for instance, is not an expert in philosophy. Consequently, there is no reason to take the biologist’s philosophical opinions any more seriously than those of a taxi driver, a fisherman, or a nurse. But experts tend to be confident, and numerous studies have shown that those who are confident are much more likely to be believed, even though they do not have any more information than those who are less confident.7

Thinking

It remains to consider the last two items in the list of things (given to Kāpaṭhika by the Buddha) that ‘can turn out in two different ways’. I will take these two items together because they are both concerned with forming an opinion through thinking. The first is ‘reasoned consideration’ or ‘examination of reasons’ (ākāraparivitakko). The second is ‘reflective acceptance of a view’ (diṭṭhinijjhānakkhanti). To put it simply, in the first of these you think about something; in the second you think about someone else’s opinion.

‘Reasoned consideration’ is an immensely valuable skill, but it is not nearly as common as is supposed. Clear and systematic thinking is a hard thing to do. When people say that they ‘think for themselves’, they usually mean that they feel free to adopt whatever notions they want from the common pool of ideas. But those ideas are other people’s, not their own. While this in itself is a good thing – an aspect of freedom – it is not the same as really thinking something through and coming to an independent conclusion.

Even when you think something through as thoroughly and independently as you are able, you could still come to a wrong conclusion. There are three reasons why. Firstly, your thinking will be based on the three factors listed above: your beliefs, your preference for certain views, and the information you have received from other people. If any of these are wrong, then your conclusions will be wrong too. Secondly, suppose that all three elements in your mental processes (beliefs, preferences, and information received) happen to be true. You may still come to a wrong conclusion because of a logical error in your thinking, like a cook who has the right ingredients but lacks the skill to prepare a good meal. Thirdly, even if the three elements all happen to be true, and you have made no logical errors, you are still not in a position to say ‘this is true, everything else is wrong’. Your analysis might be correct as far as it goes, and indeed very helpful, but still stop short of including all possibilities.

The Buddha taught that there are ‘wrong’ views that lead to suffering and limitation, and ‘right’ views that lead to happiness and freedom. Right views are cognitive approximations to the way things really are, but they are only approximations. Such views can be called ‘right’ only because with their aid we can eventually arrive at the direct experience of what they point to. That is the meaning of the famous Buddhist parable of the raft. If you have to cross a body of water in a wilderness, you may need a raft to get safely to the other shore. But once you are across, you will get off the raft and leave it on the shore. It would be absurd to encumber yourself with it for your onward journey over land.

But even before we get to the other shore, we should not cling too tightly to the raft. It might, for example, be easier to wade once we reach the shallows of the far shore. In one sutta, speaking about a particular ‘right view’, the Buddha tells his disciples that they should not ‘adhere to it, cherish it, treasure it, or treat it as a possession.’8 The way that you hold a view is as important as the view itself. If you treat it as a possession, for instance, you will be unwilling to let go of it in order to have direct experience.

A ‘parable’ of my own may make this point clearer. Suppose you are going to travel to a foreign country. You have heard it said that the people there do not like people from your country, and although they might seem friendly, that will only be a pretence in order to cheat you. But further suppose that this account, though rooted in somebody’s experience and potentially of value, is mostly out of date or exaggerated. If you are open to experience, you will quickly discover the truth, and easily discard or modify the view. But if you hold tightly to that view when you get to the foreign country, you will disregard your experience; you will ‘see’ the locals as invariably hostile and insincere, and rebuff any friendly overtures they make. In this way, your view will condition and limit your experience. And because you hold back from the people to protect yourself, they will hold back from you because you seem unfriendly.

Protecting the Truth

We have seen that whatever conclusions we may have come to – whether through belief, inclination, repeated hearing, or thinking – could be true. It is not that we can’t rely on them at all. After all, we can’t know everything for ourselves. We can’t help relying on other people for much of our information, and relying on our ability, however limited, to think logically. But we should not forget that our conclusions may be partial or even false. We must always keep sight of the possibility that we have got it wrong.

This is why the Buddha says,

Under these conditions it is not proper for a wise person who protects truth to come to the definite conclusion: ‘Only this is true, anything else is wrong.’9

We can best protect the truth by admitting we don’t own it. We may have an opinion, but we should not say categorically that it is the truth. This is a difficult position to hold because we human beings have a strong desire for certainty. Uncertainty and ambiguity are unpleasant, making us crave what social psychologist Arie Kruglanski calls ‘cognitive closure’ – the ‘desire for a firm answer to a question, and an aversion toward ambiguity’. Kruglanski has identified two stages of cognitive closure: ‘seizing’ and ‘freezing’. First, we seize any information we can, often without taking the time to verify it. Then we ‘freeze’ that knowledge in an attempt to gain closure: that is, to make it permanent, which gives us a certain confidence. This is similar to what the Buddha called ‘adhering to a view’. According to Kruglanski, there is another factor that kicks in here: if we have made our view public, we then feel that we have to maintain that view in order to appear consistent. To give it up would be to lose esteem, at least among the people with whom – on the basis of the view – we have chosen to associate. In short, we feel social pressure to remain attached to a view.

To admit honestly that, while you have an opinion, you don’t actually know the truth is to stay in the realm of uncertainty. That is uncomfortable. The Buddha told Kāpaṭhika that it is not proper for a wise person to come to a definite conclusion when they don’t have direct access to that truth, when they only have an opinion. ‘Wise’ here is a translation of viññu, the same word the Buddha uses when he advises the Kālāmas that they should rely on what ‘the wise’ say. This suggests that when the Buddha referred to the wise, he didn’t mean experts – people who have accumulated masses of information. Perhaps he simply meant those who are able to distinguish fact from opinion, and to remain in the uncomfortable state of uncertainty until the truth is known.

I’ve noticed a tendency in myself to slide towards certainty when I lack the evidence necessary for it. For instance, I think there are good reasons to doubt the popularly accepted narrative that the world is warming dangerously, and that this is due to human-caused CO2 emissions. While I think there are good reasons to doubt it, I am not in a position to say that it is definitely wrong. In my more reflective moments I maintain this position, but there are times when I shift to a more definite one – to thinking and saying that the popular narrative is definitely wrong.

I suppose this happens simply because of my human need for certainty, but I suspect it also happens because of my occasional annoyance with those who are certain that they know the world is warming dangerously, when actually they are in the same position as I am – they are not in a position to know. And it is this unjustified certainty that leads to polarisation – there’s a temptation to meet certainty with certainty. If we can keep in mind the distinction between what we know and what we don’t, between knowledge and opinion, then we can have much more fruitful discussions – rather like the discussions the blind men could have had about the elephant had they been less certain of what they thought it was, and more willing to listen to others’ opinions.

Eel Wrigglers

By this point, you may have formed the impression that the Buddha advocated a hard scepticism – the view that definite knowledge is impossible, and therefore we shouldn’t commit ourselves to any ideals or principles. Or perhaps you fear that the problem is that

The best lack all conviction, while the worst

Are full of passionate intensity.10

But as well as being critical of those who asserted, ‘Only this is true, anything else is wrong,’ the Buddha was equally critical of those he called equivocators. (The literal translation of the Pali word is ‘eel wrigglers’. The Buddha lived in a largely pre-literate society, in which language tended to be concrete rather than abstract. Everyone would have understood this image immediately: when caught, an eel would wriggle in its attempt to escape.) An equivocator is someone who will not commit to a position in a debate. When asked for their opinion, they ‘wriggle’ in the hope of escaping censure from either side. The Buddha said of them:

They think: ‘I don’t truly understand what is good and what is bad. If I were to declare that something was good or bad, I might be wrong. That would be stressful for me, and that stress would be an obstacle.’ So, from fear and disgust with false speech they avoid stating whether something is good or bad.

Whenever they’re asked a question, they resort to evasiveness and equivocation: ‘I don’t say it’s like this. I don’t say it’s like that. I don’t say it’s otherwise. I don’t say it’s not so. And I don’t deny it’s not so.’11

Accepting the limitations of our knowledge and the unreliability of our opinions doesn’t mean that we should hold no opinions and do nothing. It doesn’t absolve us of the responsibility of making judgements and acting on them. It just means that we always have to act with the awareness that we could be making a mistake. In fact, very often we only realise our mistake once we have acted. If we never commit to an opinion, and therefore never base any actions firmly upon one, we deprive ourselves of the opportunity to discover which of our opinions are close to the truth and which are far from it.

In one important respect, the equivocators are very similar to those who are certain that they have the truth: both refuse to learn. The latter refuse by imagining that they already know the truth; the former by declining to commit to an opinion and then test it against reason and experience.

Notice the reason why the equivocators don’t commit to a position: they are less concerned about the truth than about the indignity of being caught in the wrong. The poet Dante called this il gran rifiuto – ‘the great refusal’ to commit oneself, which he considered an act of cowardice. In his great poem Inferno he depicts the equivocators as wretchedly marooned on the shore of Acheron, the river across which Charon ferries the lost souls to Hell.

This miserable way

is taken by the sorry souls of those

who lived without disgrace and without praise.

They now commingle with the coward angels,

the company of those who were not rebels

nor faithful to their God, but stood apart.

The heavens, that their beauty not be lessened,

have cast them out, nor will deep Hell receive them—

even the wicked cannot glory in them.12

I’m going to finish this article with another Christian writer. Thomas Aquinas was a man of great conviction but, according to Denys Turner in his book on Aquinas, he was also an exemplar of the capacity to hold an opinion lightly and let go of it when it was shown to be wrong:

His contemporaries most frequently commented on Thomas’s humility, … that peculiarly difficult form of vulnerability, which consists in being entirely open to the discovery of truth, especially to the truth about oneself. One might say, likewise, that what humility is to the moral life, lucidity is to the intellectual – an openness to contestation, the refusal to hide behind the opacity of the obscure, a vulnerability to refutation to which one is open simply as a result of being clear enough to be seen, if wrong, to be wrong.13

Aquinas was of course convinced of the existence of God. I think he was mistaken about this. But I only think that; I don’t know it.

Footnotes

1. https://suttacentral.net/an3.65/en/bodhi Translation Bhikkhu Bodhi.

2. Knowledge and Truth in Early Buddhism: An Examination of the Kālāma Sutta and Related Pāli Canonical Texts, Dharmacārī Nāgapriya. Published in The Western Buddhist Review, Volume 3 (2001).

3. https://climate.nasa.gov/vital-signs/global-temperature/

4. Udana 6.4. https://suttacentral.net/ud6.4/en/anandajoti Translation Anandajoti, slightly modified.

5. Caṅkī sutta https://suttacentral.net/mn95/en/bodhi Translation Bhikkhu Bodhi.

6. Conformity, Cass R. Sunstein, New York University Press, 2019. Kindle edition.

7. https://www.sicotests.com/psyarticle.asp?id=136

8. Mahatanhasankhaya Sutta, (The Sutta on Craving), Majjhima Nikaya 38. Translation Bhikkhu Bodhi.

9. ‘Protects’ is a translation of rakkhati, which can also mean shelter, save, or preserve.

10. The Second Coming, W.B. Yeats.

11. Translation Bhikkhu Sujato.

12. Inferno, Canto 3, 34-42. Translation Allen Mandelbaum.

13. Thomas Aquinas, by Denys Turner, Page 39.